How to Choose the Right QMS Software for Life Sciences

Quality management software is rarely selected in a vacuum.

Organizations typically begin evaluating QMS platforms after a meaningful inflection point. A regulatory inspection exposes gaps that were previously manageable. Growth introduces complexity the existing system cannot support. A merger forces multiple quality environments to coexist. In some cases, the trigger is less dramatic but just as consequential: the growing realization that maintaining compliance has become overly manual, reactive, and dependent on institutional knowledge rather than controlled systems.

What follows is practical, buyer-focused guidance on how to evaluate QMS software for life sciences, grounded in real decisions teams face and the tradeoffs that come with them.

This is not a checklist designed to validate a purchase already made. It is a framework for making an informed decision before technical debt, compliance risk, or organizational inertia narrow the available options.

Why QMS selection matters more than ever

The role of the quality management system has changed. Historically, many organizations treated QMS software as a document repository with workflow. As long as procedures were approved and training records existed, the system was considered sufficient.

That view no longer holds.

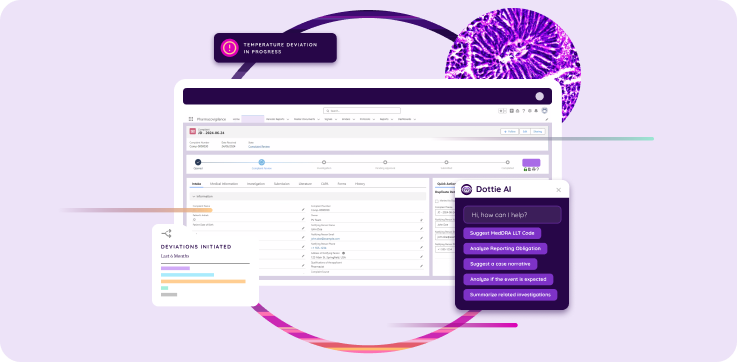

Regulatory expectations continue to evolve. Product portfolios expand. Global operations introduce variability. Data volume increases. At the same time, organizations are under pressure to move faster without compromising compliance. In this environment, the QMS is no longer a passive system of record. It becomes the operational backbone for how quality is executed, monitored, and defended.

The wrong system does not typically fail immediately. It fails gradually.

Teams compensate with manual workarounds. Validation effort increases. Reporting becomes slower. Cross-functional visibility declines. Over time, the system becomes something the organization works around rather than relies on.

Choosing well at the outset avoids these patterns.

Start with the problem you are actually trying to solve

One of the most common mistakes in QMS selection is beginning with features rather than outcomes.

Organizations often approach evaluations with a list of required modules: document control, training, deviations, CAPA, change management, supplier quality. These are necessary components, but they are not differentiators. Nearly every modern QMS vendor can demonstrate them.

A more productive starting point is to articulate what is not working today and why.

For example:

- Are audits difficult because evidence is fragmented across systems?

- Are deviations taking too long to close because investigations lack context?

- Does training feel disconnected from procedural change?

- Is reporting slow because data must be exported and reconciled manually?

- Are quality decisions difficult to defend because rationale is not traceable?

These issues point to structural gaps rather than missing features.

A strong QMS addresses how information flows across processes, not just whether workflows exist.

Understand the regulatory foundation the system must support

In life sciences, compliance is not optional, but interpretation varies.

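A QMS must support applicable regulations and standards, such as 21 CFR Part 11 (the FDA rule on electronic records and electronic signatures) and ISO 13485 (quality management systems for medical devices), along with regional requirements that depend on product type and market.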

Most vendors will claim alignment. Buyers should look beyond those claims and assess how compliance is operationalized.

Key questions to explore include:

- How are electronic records protected from unauthorized change?

- How are audit trails generated, stored, and reviewed?

- How does the system enforce role-based access?

- How are electronic signatures implemented and linked to intent?

- How does the system support validation activities over time?

The answers should be demonstrated within the software, not explained conceptually. A system that relies heavily on procedural controls to compensate for technical limitations shifts compliance burden back onto the organization.
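To make the idea of a technical control concrete, the sketch below shows one hypothetical way an audit trail can be made tamper-evident: each entry stores a hash of the previous entry, so any later edit breaks the chain. This is an illustration only. Hash chaining is an assumed technique, not a requirement of 21 CFR Part 11 and not a description of any vendor's implementation, and the names `AuditEntry`, `append_entry`, and `verify_trail` are invented for this example.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder hash used before the first entry exists


@dataclass(frozen=True)
class AuditEntry:
    user: str
    action: str
    record_id: str
    timestamp: str   # ISO 8601 string supplied by the caller
    prev_hash: str   # hash of the entry that came before this one
    entry_hash: str  # hash over this entry's fields plus prev_hash


def _digest(user, action, record_id, timestamp, prev_hash):
    """Hash the entry fields in a fixed order so verification is repeatable."""
    payload = json.dumps([user, action, record_id, timestamp, prev_hash])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(trail, user, action, record_id, timestamp):
    """Return a new trail with one entry appended; existing entries are untouched."""
    prev_hash = trail[-1].entry_hash if trail else GENESIS
    entry_hash = _digest(user, action, record_id, timestamp, prev_hash)
    return trail + [AuditEntry(user, action, record_id, timestamp, prev_hash, entry_hash)]


def verify_trail(trail):
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in trail:
        expected = _digest(entry.user, entry.action, entry.record_id,
                           entry.timestamp, entry.prev_hash)
        if entry.prev_hash != prev_hash or entry.entry_hash != expected:
            return False
        prev_hash = entry.entry_hash
    return True
```

During a demonstration, the equivalent question for a vendor is whether the system itself can show that a record's history is complete and unaltered, rather than relying on a procedure that says it should be.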

Validation should be supported, not outsourced

Validation remains one of the most misunderstood aspects of QMS selection.

Some organizations assume that buying commercial software transfers validation responsibility to the vendor. Others underestimate the effort required to maintain validation as systems evolve.

In reality, validation is a shared responsibility. Vendors should provide documentation, test scripts, and guidance. Organizations remain accountable for ensuring the system functions as intended within their environment.

When evaluating QMS software, assess:

- Whether validation documentation is readily available and updated

- How system updates are managed and communicated

- Whether configuration changes can be validated incrementally

- How much effort is required to maintain validation year over year

A system that requires extensive revalidation for routine updates may slow adoption of improvements and increase long-term cost.

Consider how the system scales with your organization

Scalability is often discussed but rarely examined in concrete terms.

Growth affects quality systems in predictable ways. More users introduce complexity in access control. Additional products increase document volume and training requirements. New sites add variability. Mergers introduce parallel processes that must eventually converge.

When evaluating scalability, look beyond user counts and storage limits. Consider:

- How easily can workflows be adapted as processes mature?

- Can the system support multiple business units without duplication?

- Does reporting remain performant as data volume increases?

- Can the system integrate with other enterprise platforms?

A QMS that performs well for a small organization may struggle as complexity increases. Conversely, a system designed for large enterprises may be overly rigid for organizations still evolving their processes.

Integration is no longer optional

Quality does not operate in isolation.

Modern life sciences organizations rely on interconnected systems: ERP for materials and production, CRM for customer interactions, LIMS for laboratory data, and increasingly, platforms that span multiple functions.

A QMS should not require extensive custom development to exchange information with these systems. Integration capabilities should be native, well-documented, and actively supported.

Ask vendors to demonstrate:

- How data flows between systems in real time

- How master data is synchronized

- How changes in one system trigger quality actions in another

- How integrations are maintained during upgrades

Poor integration increases manual work and introduces risk. Strong integration supports timely decision-making and reduces reconciliation effort.
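As a rough illustration of a change in one system triggering a quality action in another, the sketch below routes a hypothetical ERP event to a QMS action. Everything here is assumed for the example: the event fields (`type`, `result`, `lot_id`), the `QualityActionLog` class, and the rule that a failed lot inspection opens a deviation. A real integration would use the vendor's documented interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class QualityActionLog:
    """Stand-in for the QMS side of an integration (hypothetical)."""
    actions: list = field(default_factory=list)

    def open_deviation(self, source, reference, reason):
        action = {"type": "deviation", "source": source,
                  "reference": reference, "reason": reason}
        self.actions.append(action)
        return action


def handle_erp_event(event, qms):
    """Open a deviation when an ERP event reports a failed lot inspection.

    Events of any other kind are ignored and return None.
    """
    if event.get("type") == "lot_inspection" and event.get("result") == "fail":
        return qms.open_deviation("ERP", event["lot_id"],
                                  "Lot failed incoming inspection")
    return None
```

The point of asking for a live demonstration is to see whether rules like this are configured inside the product or depend on custom code that must be retested at every upgrade.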

Evaluate the user experience honestly

Adoption matters.

A technically compliant system that users resist will not deliver the intended benefits. In many organizations, quality teams become system administrators simply because the software is difficult to use.

During evaluations, involve actual users, not just administrators. Observe how easily common tasks can be completed:

- Creating and revising documents

- Completing training

- Initiating deviations

- Reviewing and approving records

- Locating historical information

User experience should support consistency and reduce reliance on informal guidance. If the system requires extensive training to perform basic actions, it may introduce friction that undermines compliance rather than supporting it.

Reporting and visibility should be built in

One of the strongest indicators of QMS maturity is how easily organizations can answer basic questions about their quality performance.

For example:

- How many open CAPAs exist by risk level?

- Which suppliers present the highest quality risk?

- Where are investigations consistently delayed?

- How often are procedures updated without an assessment of retraining impact?

If answering these questions requires exporting data into spreadsheets, visibility is limited.

A well-designed QMS surfaces this information directly through dashboards and reports.
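The first question above is a useful test of how accessible the data really is. When records are structured, counting open CAPAs by risk level is a one-line aggregation, as the minimal sketch below suggests. The `Capa` record and its field values are assumptions made for illustration, not the data model of any particular product.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Capa:
    capa_id: str
    risk_level: str  # assumed values: "high", "medium", "low"
    status: str      # assumed values: "open", "closed"


def open_capas_by_risk(capas):
    """Count open CAPAs per risk level; closed CAPAs are excluded."""
    return Counter(c.risk_level for c in capas if c.status == "open")
```

If a vendor cannot produce this kind of answer from within the system during a demo, expect the same limitation to surface during management review.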

When evaluating reporting capabilities, consider:

- Whether reports are configurable without custom code

- Whether data can be filtered across processes

- Whether trends are visible over time

- Whether reports are suitable for management review and inspections

Visibility supports proactive quality management. Without it, teams remain reactive.

Cost should be assessed over the system lifecycle

Initial licensing costs tell only part of the story.

Total cost of ownership includes implementation, validation, integration, configuration, ongoing support, and internal resource time.

Systems that appear cost-effective upfront may become expensive as complexity increases.

During evaluation, discuss:

- Implementation timelines and required resources

- Upgrade frequency and associated effort

- Support models and response times

- Costs associated with scaling users or modules

Predictability matters. Organizations benefit from cost structures that align with growth rather than penalize it.
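One way to make lifecycle cost comparable across vendors is to add one-time and recurring costs over a planning horizon with a projected user count for each year. The sketch below does that arithmetic. The cost categories follow the list earlier in this section, but the field names and the per-user licensing assumption are illustrative; actual pricing models vary by vendor.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    # One-time costs
    implementation: float
    validation: float
    integration: float
    configuration: float
    # Recurring costs, per year
    license_per_user: float
    annual_support: float
    annual_validation_upkeep: float
    annual_internal_effort: float


def total_cost_of_ownership(model, users_by_year):
    """Sum one-time costs plus recurring costs for each year in the horizon.

    users_by_year holds the projected user count for each year, so growth
    in users is reflected in licensing cost.
    """
    one_time = (model.implementation + model.validation
                + model.integration + model.configuration)
    fixed_annual = (model.annual_support + model.annual_validation_upkeep
                    + model.annual_internal_effort)
    recurring = sum(model.license_per_user * users + fixed_annual
                    for users in users_by_year)
    return one_time + recurring
```

Running the same projection for each shortlisted system, with the same growth assumptions, shows whether a low initial price holds up as users and modules are added.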

Ask vendors how they evolve their product

Quality requirements do not stand still, and neither should the software that supports them.

A QMS vendor’s roadmap reveals whether the platform will remain aligned with regulatory expectations and industry practices. Ask about:

- Frequency of updates

- Approach to incorporating regulatory change

- Investment in innovation

- Customer feedback mechanisms

Vendors should demonstrate a track record of continuous improvement rather than relying on static feature sets.

Migration deserves its own discussion

Few organizations select QMS software without considering migration from an existing system.

Migration introduces risk if poorly managed, but it also presents an opportunity to reassess processes. Vendors should provide structured migration approaches, including data mapping, validation support, and phased transitions when appropriate.

Ask how migrations are handled:

- Can legacy data be preserved and accessed?

- Can systems run in parallel during transition?

- How is user adoption managed?

- What lessons have been learned from similar migrations?

A thoughtful migration plan reduces disruption and builds confidence.

Align the system with how quality actually operates

Perhaps the most important evaluation criterion is alignment with reality.

A QMS should reflect how quality work is performed, not an idealized version of it.

Systems that require significant process distortion to fit the software often fail to deliver value.

During demonstrations, challenge vendors with real scenarios. Ask them to show how the system handles exceptions, escalations, and change. Observe whether workflows feel natural or forced.

A system that aligns with actual operations supports consistency and resilience.

A practical way to compare QMS options

Once teams narrow their shortlist, side-by-side comparison becomes essential. High-level feature lists rarely reveal meaningful differences, so it helps to evaluate systems against criteria that affect long-term usability, compliance, and cost.

The table below outlines common evaluation areas and the questions buyers should be asking as they assess QMS platforms.

| Evaluation area | What to assess | Why it matters |
| --- | --- | --- |
| Regulatory alignment | Support for 21 CFR Part 11, ISO 13485, and applicable regional requirements | Ensures the system can be defended during inspections without relying on workarounds |
| Validation support | Availability of validation documentation, test scripts, and update impact assessments | Reduces internal effort and risk as the system evolves |
| Architecture | Cloud-native design versus hosted legacy software | Impacts scalability, upgrade effort, and long-term maintenance |
| Integration | Native connectivity with ERP, CRM, LIMS, and other enterprise systems | Prevents data silos and manual reconciliation |
| Workflow flexibility | Ability to adapt processes without custom development | Supports growth, mergers, and evolving quality models |
| Reporting and visibility | Real-time dashboards and cross-process reporting | Enables proactive quality management and management review |
| User experience | Ease of use for day-to-day quality tasks | Drives adoption and consistent execution |
| Cost model | Licensing, implementation, validation, and scaling costs | Affects total cost of ownership over time |
| Vendor roadmap | Frequency of updates and investment in innovation | Determines whether the system keeps pace with regulatory and business change |

This type of structured comparison helps teams move beyond surface-level differences and focus on how each system will perform under real operating conditions.
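Teams that want to turn the comparison into a number sometimes use a weighted scoring matrix: each evaluation area gets a weight reflecting its importance, each system gets a score per area, and the weighted average is compared. The sketch below is one simple version of that idea. The weights, the 1-to-5 scale, and the function name are assumptions for illustration; the article does not prescribe a scoring method.

```python
def weighted_score(weights, scores):
    """Return the weighted average of scores across evaluation areas.

    weights: mapping of evaluation area -> relative importance
    scores:  mapping of evaluation area -> score for one system (e.g. 1 to 5)
    Raises ValueError if any weighted area has not been scored.
    """
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[area] * scores[area] for area in weights) / total_weight


# Illustrative weights and scores only
weights = {"Regulatory alignment": 5, "Validation support": 4, "Integration": 3}
system_a = {"Regulatory alignment": 4, "Validation support": 3, "Integration": 5}
system_b = {"Regulatory alignment": 3, "Validation support": 4, "Integration": 4}
ranked = sorted(
    {"System A": system_a, "System B": system_b}.items(),
    key=lambda item: weighted_score(weights, item[1]),
    reverse=True,
)
```

A score like this is best treated as a prompt for discussion among stakeholders, since the weights encode judgment calls that deserve to be stated openly.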

Use a checklist to guide your evaluation

Selecting QMS software is not a decision teams make often, and the stakes are high. A structured evaluation approach helps ensure critical requirements are not overlooked and tradeoffs are considered deliberately rather than discovered later.

To support this process, we created a QMS software evaluation checklist for life sciences organizations. It is designed to help buyers assess platforms across compliance, validation, scalability, integration, and long-term fit.

Use the checklist to:

- Prepare internal evaluation criteria before vendor demos

- Compare systems consistently across stakeholders

- Identify potential risks early in the selection process

- Document decision rationale for leadership and audits

Download the checklist to assess your QMS readiness and guide your software evaluation.